A new sensor inspired by the retina—the layer of cells in human and animal eyes that detects light and transmits signals to the brain—could improve the safety and performance of self-driving cars, robotics and high-speed autonomous drones.

The advance, created by a multi-institution team led by Akhilesh Jaiswal, an assistant professor of electrical and computer engineering at the University of Wisconsin-Madison, appears in the September 2023 issue of the journal Frontiers in Neuroscience.

Akhilesh Jaiswal


Jaiswal says he got the idea for the sensor after reading a news article about recent discoveries in human vision. “It said that the eyes are smarter than what scientists believed,” he remembers. “That just stayed with me for a while. And I thought, well, if you can put that kind of smartness into cameras, it would be good.”

Digging into the literature about the retina, Jaiswal found the biology frustrating. So, in a late-night email, he reached out to Gregory Schwartz in the Department of Ophthalmology at Northwestern University to see if he would like to collaborate on the project. Schwartz, an expert in retinal computation, agreed, and the IRIS (Integrated Retinal functionality in Image Sensors) Project was born. The team added more experts, including Ajey Jacob, a semiconductor designer with the Information Sciences Institute at the University of Southern California, and Maryam Parsa, a bio-inspired algorithm expert at George Mason University.

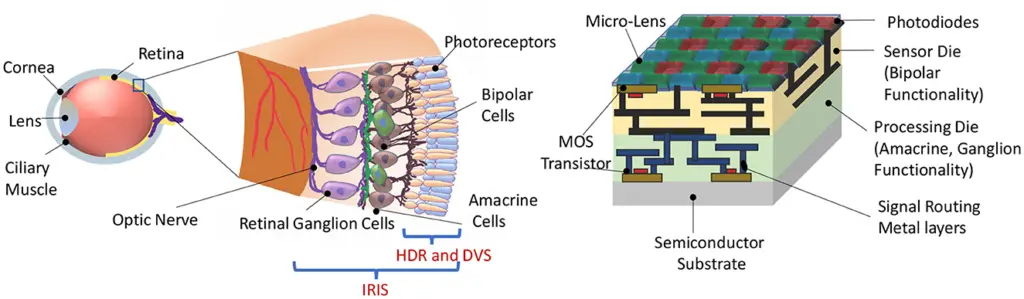

Until recently, researchers believed that retinas and conventional image sensors worked in a similar way. A layer of cells, or pixels, detects light and does some minor filtering before transmitting that information to the brain, or central processing unit, where all of the processing takes place. However, in the past decade, researchers identified rich computations performed by layers of specialized cells in the retina, beneath the light-detecting layer. Those layers process some information almost instantly, with no brain needed. In fact, the number of specialized cells suggests that the retina can extract dozens of different features simultaneously from the field of vision, allowing people to dodge a ball, detect a predator giving chase and perform many other survival tasks without really thinking about it.

“Evolution solved the problem of high-speed navigation with sophisticated pre-processing in the retina,” says Schwartz. “We believe that the next generation of autonomous vehicles needs to incorporate retina-inspired computations at the level of the hardware of the sensor.”

For IRIS, Jaiswal and his team decided to focus on two critical retinal features. Object motion sensitivity identifies moving objects against a moving background, like when you’re driving and you notice an ambulance speeding up behind you. Looming detection is a retinal ability to quickly identify an object that is approaching and may represent danger. Both of these retinal perceptions can improve the safety of autonomous vehicles or drones.
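As a rough illustration of these two ideas, the sketch below flags pixels whose motion differs from the dominant background motion and checks whether an object's apparent size keeps growing from frame to frame. It is a simplified software analogy, not the retinal circuitry or the IRIS hardware design. The function names, the median-based background estimate, and the threshold values are all assumptions made for the example.

```python
from statistics import median


def object_motion_mask(flow, tolerance=0.5):
    """Flag pixels that move differently from the background.

    flow: 2D list of horizontal displacements, one value per pixel.
    The background motion is ASSUMED to be the median displacement.
    """
    background = median(v for row in flow for v in row)
    return [[abs(v - background) > tolerance for v in row] for row in flow]


def is_looming(areas, growth_threshold=1.2):
    """Return True if an object's apparent size grows in every frame.

    areas: apparent size of one object in successive frames.
    growth_threshold is a hypothetical per-frame growth factor.
    """
    if len(areas) < 2:
        return False
    return all(b >= growth_threshold * a for a, b in zip(areas, areas[1:]))


# Background drifts right by 2 units; two pixels move left instead.
flow = [
    [2.0, 2.0, 2.0, 2.0],
    [2.0, -1.0, -1.0, 2.0],
    [2.0, 2.0, 2.0, 2.0],
]
mask = object_motion_mask(flow)
looming = is_looming([10, 13, 17, 23])
```

Here `mask` marks only the two pixels moving against the background, and `looming` is true because the object's size grows by at least 20 percent in each step.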

The new sensor designed by the IRIS project is directly inspired by recent findings on how human and animal retinas pre-process visual information.


To add these features to a camera, the team proposed a new type of sensor using cutting-edge 3D chip-stacking techniques. The first chip uses dynamic pixels, which respond to changes in light intensity. Below that is another chip, which conducts preprocessing computations akin to those of the layers of retinal cells in the eye. Together, the stacked chips allow a car or drone to react before the information even reaches the central processing unit. “IRIS offers immense potential for high-speed, robust, energy-efficient, and real-time decision-making in resource/bandwidth-constrained environments,” says Parsa.
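The idea of a pixel that responds only to changes in light intensity can be sketched in a few lines of software. The example below compares two frames and reports, per pixel, whether brightness rose, fell, or stayed roughly the same. The function name, the plain-difference comparison, and the threshold are assumptions chosen for illustration; the article does not describe how the IRIS pixels implement this in hardware.

```python
def dynamic_pixel_events(prev_frame, next_frame, threshold=0.2):
    """Return +1, -1, or 0 per pixel for a brightness rise, fall, or no change.

    Frames are 2D lists of intensities in the range 0.0 to 1.0.
    The threshold is a hypothetical sensitivity setting.
    """
    events = []
    for prev_row, next_row in zip(prev_frame, next_frame):
        row = []
        for before, after in zip(prev_row, next_row):
            delta = after - before
            if delta > threshold:
                row.append(1)    # intensity increased
            elif delta < -threshold:
                row.append(-1)   # intensity decreased
            else:
                row.append(0)    # no significant change, nothing to report
        events.append(row)
    return events


prev_frame = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]
next_frame = [[0.5, 0.9, 0.5], [0.1, 0.5, 0.5]]
events = dynamic_pixel_events(prev_frame, next_frame)
```

Only the two pixels whose brightness changed produce a nonzero entry, which hints at why a change-driven sensor can pass along far less data than one that reports every pixel in every frame.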

The team designed and simulated the sensor using commercial process design kits and is in discussions with semiconductor manufacturers about creating the first prototypes of the new sensors. Importantly, Jaiswal says, the sensor can be manufactured using current production techniques and components, meaning it could come to market sooner rather than later.

The design could also be a jumping-off point for sensors that mimic other capabilities of the retina. “We are ushering in an era where cameras have the capability to see, capture and analyze the world just as the human eye does,” says Jacob, “unveiling previously unseen details and spearheading unprecedented advancements in vision science.”

Additional authors are Zihan Yin of UW-Madison, Md Abdullah Al-Kaiser and Mark Camarena of the University of Southern California, and Lamine Ousmane Camara of Northwestern University.

Top image credit: iStock