In that far, far away galaxy where rebel Jedi once fought the dark forces of a galactic empire, robots played an integral role in daily life. From Roomba-like floor machines roaming the Death Star’s corridors to utilitarian spacecraft mechanics like R2-D2 and BB-8 to humanlike androids the likes of C-3PO and the lesser known surgical android 2-1B, the robots of Star Wars relied on robust artificial intelligence and varying degrees of dexterity to perform their tasks.

They’re also, obviously, the product of science fiction.

But here, planted firmly in the reality of planet Earth, artificial intelligence is rapidly transforming our digital lives. Certainly, robots are quickly becoming more intelligent, thanks to supercomputing and machine learning. But apart from machines that perform single or highly repetitive tasks, robots as they’ve largely been imagined in science fiction are still mostly absent from our physical lives. Indeed, robots’ progress from the floors of our factories and homes to our operating rooms and other settings that demand more complex functions has so far been halting.

That’s largely because engineers must overcome enormous challenges to control the precise physical movements of machines.

Mechanical Engineering Associate Professor Michael Zinn (on left) is in the midst of exploring several lines of human-centered robotics research. One of these research areas involves refining the haptics, or touch interactions, that can make virtual reality experiences more “real.” Photo: Renee Meiller.

Developing human-centered robots, machines whose physical interactions with humans are every bit as precise and complex as their intellectual interactions, is especially daunting. Mechanical engineers Michael Zinn and Peter Adamczyk are among the engineers around the world taking on that task. Their work ranges from fundamental control problems to robotic rehabilitation and other healthcare applications.

Making the virtual more realistic

Zinn, an associate professor of mechanical engineering, is in the midst of exploring several lines of human-centered robotics research. His work spans both fundamental inquiry and the development of specific devices; for example, he has helped design robotic catheters and robotic systems for assessing motor skills in autistic children. Zinn heads the UW-Madison REACH (Robotics Engineering, Applied Controls and Haptics) Lab, where he spends much of his time devising and testing control systems that allow humans to interact ever more intimately with robots and with virtual-robotic environments.

“Fundamentally, I’m kind of a nerd,” says Zinn, as he explains the immense challenge of designing a robotic system that can move with the precision, speed, dexterity and care that will be required if robots are to ever make the leap from single-skilled automatons relegated to their workstations to something more akin to a corporeal Siri. “It’s fun,” he adds. “The stuff I do is scientifically rigorous, but at the same time we’re building these electromechanical things that do what you want them to do. It’s empowering.”

One area receiving more and more attention in robotics research is the subfield of haptics, Zinn says. In the context of robotics and systems control, haptics deals with interfaces and devices that allow people to interact physically with a virtual world. At its most basic level, think of a video game controller that vibrates to represent intense physical movements within a game. Zinn is currently working on a haptics problem on behalf of a company that sells virtual reality retail products. The ultimate goal is a control system that allows for much more varied and nuanced physical interactions between humans and a virtual environment. It’s an exceedingly tough nut to crack, and Zinn is starting with the fundamental problems.

“There are current devices that are supposed to accomplish this task, including gloves, devices that are essentially a 3D joystick and devices that look like little robots,” says Zinn. “All of them work okay to bad.”

The challenge is designing a device that can create plausible sensory effects at the extremes—like a hard tabletop or a soft robe. Right now, he’s working on a line of hybrid actuation devices, which are a combination of motors and passive systems that essentially work as brakes.

“The advantage of a brake in the system is: Imagine that I have a device, and I’m about to push on a surface and then I just lock the device with brakes. It’s going to be as stiff as that device structure is,” Zinn explains.

Brakes such as these could be useful for creating relatively stiff sensations for users. “I can achieve that stiffness with no power,” Zinn says. “But the brake can’t push back because it’s passive and its only function is to take energy out of the system. So, you have to pair it with active devices, and people have tried to do this with very limited success. The result is a very asymmetric system where you can get super high stiffness or nothing.”
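The brake’s limitation can be stated as a power constraint: a passive brake can only resist motion, so the power it delivers (force times velocity) is never positive. The minimal Python sketch below is not Zinn’s controller; it simply splits a desired one-dimensional force between a brake and a small motor under that constraint. The function name, the force limits and the rule of handing the motor whatever the brake cannot supply are illustrative assumptions, and the sketch conservatively ignores the brake’s ability to hold a stationary device.

```python
def split_hybrid_force(f_desired, velocity, brake_max, motor_max):
    """Split a desired 1-DOF force (N) between a passive brake and a motor.

    A brake can only oppose motion, so brake force times velocity is never
    positive. Simplification: at zero velocity the brake is left unused.
    """
    f_brake = 0.0
    if f_desired * velocity < 0.0:
        # The desired force opposes the motion, so the brake can supply it,
        # saturated at its maximum resisting force.
        f_brake = max(-brake_max, min(brake_max, f_desired))
    # Whatever the brake cannot provide falls to the (saturated) motor.
    f_motor = max(-motor_max, min(motor_max, f_desired - f_brake))
    return f_brake, f_motor


# Pushing into a virtual wall: the brake supplies all 5 N of resistance.
print(split_hybrid_force(-5.0, 1.0, brake_max=10.0, motor_max=2.0))  # (-5.0, 0.0)
# Pushing the user along: only the small motor can help, capped at 2 N.
print(split_hybrid_force(5.0, 1.0, brake_max=10.0, motor_max=2.0))   # (0.0, 2.0)
```

With a strong brake and a weak motor, a force that opposes motion can be rendered almost fully, while a force that acts along the direction of motion is capped at the motor’s small limit. That is the asymmetry Zinn describes.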

The virtual reality company approached Zinn after reviewing the PhD research he did years ago on active devices that could help create some of the more intermediate haptic effects. That design pairs a large motor with a small motor as the active elements, a pairing Zinn called “macro-mini” in his PhD work. The idea is that each motor specializes in different haptic sensations.

“The big motor is good at low frequency stuff, where it can push back really hard but can’t respond quickly,” Zinn explains. “Meanwhile the small motor’s good at responding quickly and can give you a good punch over a short duration. When you pair the two, you can span a larger range in terms of the speed of response.”
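One common way to divide work between a large and a small actuator is a complementary filter: a low-pass filter sends the slow part of the command to the big motor, and the remainder, which is by construction the fast part, goes to the small motor. The sketch below assumes a first-order filter, a fixed sample period and a hypothetical 5 Hz crossover; the article does not say how Zinn’s macro-mini controller actually divides the command.

```python
import math


def macro_mini_split(force_cmd, dt, cutoff_hz):
    """Split sampled force commands into macro (slow) and mini (fast) parts.

    Uses a first-order low-pass filter; the mini motor gets the residual,
    so macro[i] + mini[i] reproduces force_cmd[i] (up to rounding).
    """
    tau = 1.0 / (2.0 * math.pi * cutoff_hz)   # filter time constant (s)
    alpha = dt / (tau + dt)                   # discrete smoothing factor
    low = 0.0                                 # assumes the filter starts at rest
    macro, mini = [], []
    for f in force_cmd:
        low += alpha * (f - low)              # slow component -> big motor
        macro.append(low)
        mini.append(f - low)                  # fast remainder -> small motor
    return macro, mini


# A 1 N step sampled at 1 kHz with a hypothetical 5 Hz crossover.
step = [1.0] * 1000
macro, mini = macro_mini_split(step, dt=0.001, cutoff_hz=5.0)
print(round(mini[0], 3), round(macro[-1], 3))
```

Because the small motor receives exactly the residual, the two outputs always sum to the original command. For a sudden step in force, the small motor supplies the initial punch and the big motor gradually takes over.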

Mechanical Engineering Assistant Professor Peter Adamczyk and his students develop mechanical and robotic solutions for people with mobility issues. Adamczyk (pictured above) is designing a rehabilitation robot that looks a lot like a regular motor-controlled exercise bike. Photo: Renee Meiller.

Zinn’s current device looks like a joystick with only one degree of freedom. “Eventually you would want it to work in a 3D setting, but we’re working on some fundamental controls elements,” he says.

The relatively basic design features a knob that pivots about an axis, with a series elastic actuator (essentially a fancy spring) attached to one side. The spring connects to a large gearhead and then to a motor, which allows the device to produce a wider spectrum of forces.

“If I didn’t have this spring, the device could produce very high forces, but when I want it to produce zero forces it would be terrible,” Zinn says. He’s early in the process, but it’s these kinds of fundamental problems that get Zinn excited. He also collaborates with researchers in the Department of Computer Science who are working on other fundamental human-robot interaction problems.
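A series elastic actuator places a spring between the motor and the load. Because the spring obeys Hooke’s law, its deflection doubles as a force sensor, and commanding a force becomes a matter of holding the spring at a particular deflection. Rendering zero force means keeping the spring undeflected, so the motor follows the knob and, in principle, the user is largely isolated from the gearhead’s friction and inertia. The Python sketch below assumes an ideal linear torsional spring; the class name, method names and stiffness value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SeriesElasticActuator:
    """Idealized SEA: motor -> linear torsional spring -> output (knob)."""
    stiffness: float  # spring constant k, N*m/rad (hypothetical value below)

    def torque(self, motor_angle: float, output_angle: float) -> float:
        # Hooke's law: transmitted torque = k * spring deflection.
        return self.stiffness * (motor_angle - output_angle)

    def motor_setpoint(self, desired_torque: float, output_angle: float) -> float:
        # Deflect the spring by desired_torque / k relative to the output.
        return output_angle + desired_torque / self.stiffness


sea = SeriesElasticActuator(stiffness=50.0)
# Zero-force rendering: the motor simply follows the knob, so no torque is felt.
print(sea.motor_setpoint(0.0, output_angle=0.3))  # 0.3
# Commanding 2 N*m means holding a 0.04 rad spring deflection.
print(sea.motor_setpoint(2.0, output_angle=0.3))
```

In a real device the motor cannot track its setpoint perfectly, so a feedback loop on the measured deflection would close the gap; the sketch shows only the force-to-deflection relationship.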

Making strides in robotic rehab

Adamczyk, whose office door is about 6 feet from Zinn’s, came to UW-Madison as an assistant professor of mechanical engineering in 2015 and has since set up the UW BADGER Lab. BADGER stands for Biomechatronics, Assistive Devices, Gait Engineering and Rehabilitation. As the name suggests, Adamczyk and his students develop mechanical and robotic solutions for people with mobility issues. In particular, Adamczyk has focused on robotic foot prostheses and lower limb rehabilitation. Both areas require robotic elements that can sense and properly respond to human movements in real time.

Sensing is the simpler part of the problem; responding physically is harder. For the limb rehabilitation research, Adamczyk uses wearable inertial sensors, which combine an accelerometer, for measuring acceleration, with a gyroscope, for measuring rotation rate (from which orientation can be estimated). The sensors are placed on a person’s foot and can measure its motion in minute detail. The data they collect help inform robotic devices about the range of motion that is normal for a foot, as well as which types of motion are associated with acute injuries or chronic conditions. Adamczyk is using these data for the complex task of building robots whose dynamic control systems are sophisticated enough not only to measure the motion of a person’s foot or leg, but also to respond to that motion in a way that helps the person move better. Despite, or perhaps because of, the complexity of this task, Adamczyk relishes the challenge.
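Because a gyroscope reports angular rate, orientation has to be obtained by integrating it, and small sensor biases accumulate into drift. A standard remedy, the complementary filter, blends the integrated gyroscope angle with the tilt implied by the accelerometer’s reading of gravity. The sketch below estimates a single tilt angle in a plane; it is a textbook illustration, not Adamczyk’s processing pipeline, and the axis convention and blending weight (alpha = 0.98) are assumptions. Full 3D foot tracking typically relies on quaternion-based or Kalman filters instead.

```python
import math


def complementary_filter(gyro_rates, accels, dt, alpha=0.98):
    """Estimate a planar tilt angle (rad) from gyro rates and accelerometer data.

    gyro_rates: angular rates (rad/s); accels: (ax, az) pairs (m/s^2).
    Assumes the accelerometer senses only gravity when computing its tilt.
    """
    angle = math.atan2(accels[0][0], accels[0][1])  # start from gravity tilt
    estimates = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        gyro_angle = angle + rate * dt      # integrate angular rate (drifts)
        accel_angle = math.atan2(ax, az)    # noisy but drift-free tilt
        angle = alpha * gyro_angle + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# A level, motionless sensor whose gyro has a 0.01 rad/s bias, for 10 s.
n, dt = 1000, 0.01
est = complementary_filter([0.01] * n, [(0.0, 9.81)] * n, dt)
print(f"pure integration: {0.01 * n * dt:.3f} rad, filtered: {est[-1]:.4f} rad")
```

Integrating the biased gyroscope alone would drift by 0.1 rad over the 10 seconds, while the filtered estimate settles near 0.005 rad, because the accelerometer term keeps pulling the estimate back toward the true tilt.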

“I love the systems development part of robotics,” says Adamczyk. “It’s what I spend my free time dreaming about. I love mechanisms and control, and I love dreaming outside the box about how these systems can be useful to humanity.”

In his latest research, Adamczyk aims to develop a robotic system for people who need extensive leg rehabilitation. The primary target is stroke patients who must completely relearn how to use their legs, but the system could serve people with a wide range of conditions that require extensive leg rehab, including cerebral palsy, musculoskeletal injuries and surgeries such as knee replacement.

Adamczyk cautions that the project is still young and nebulous, but he’s excited about its potential to fill a gap in robotics.

“People have done a lot of work on similar robotic applications for upper limbs,” Adamczyk says.

In particular, researchers have progressed fairly far in a core area of rehabilitation, where the patient tries to perform specific movements while encountering resistance. Traditionally, this resistance is provided via imprecise mechanical machines or highly trained—and expensive—physical therapists. The idea is that robotic systems could interact with a patient even more precisely than a human therapist and, by doing so, make rehabilitation more effective.

Upper limbs have received this kind of research attention, but legs have not, says Adamczyk, who sees an opportunity.

“We’ve set out, with the understanding that there’s a research gap there, to design tasks that require some sort of volitional movement and strength on the part of the patient,” he says.

To accomplish this, Adamczyk is designing a rehabilitation robot that looks a lot like a regular motor-controlled exercise bike. But, instead of the person pedaling the bike, the bike pedals the person—and only when the person pushes on it with very specific forces under his or her feet. Adamczyk’s research aims to determine how those forces can best be used to retrain movement. In collaboration with Zinn, he hopes to develop a more advanced system that moves in multiple directions.
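The article does not describe the bike’s control law. As a purely hypothetical illustration of a bike that “pedals the person” only when the person pushes with specific forces, the sketch below drives the crank at an assist speed only while the measured pedal force stays within a target window, and rate-limits speed changes so the crank never jerks the patient’s legs. The function name, force window, assist speed and rate limit are all invented for illustration.

```python
def crank_speed_commands(pedal_forces, force_window, assist_speed, max_step):
    """Map pedal-force samples (N) to crank-speed commands (rad/s).

    Hypothetical rule: drive the crank only while the patient's force is
    inside force_window; otherwise ramp the crank back toward a stop.
    """
    low, high = force_window
    speed = 0.0
    commands = []
    for force in pedal_forces:
        target = assist_speed if low <= force <= high else 0.0
        # Rate-limit speed changes so the crank accelerates gently.
        step = max(-max_step, min(max_step, target - speed))
        speed += step
        commands.append(speed)
    return commands


# Patient rests, pushes within a 40-80 N window, then pushes too hard.
print(crank_speed_commands([0, 50, 50, 50, 120], (40, 80), 2.0, 1.0))
# [0.0, 1.0, 2.0, 2.0, 1.0]
```

Rewarding only forces inside the window is one simple way to require volitional effort of a specific kind; a research system would likely shape the target forces around the pedal cycle rather than use a single fixed window.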

Refining robotics for the future

All of this is easier said than done. Zinn says a core challenge for human-centered robotics going forward will be enabling robots to reproduce lifelike movement whose complexity is so subtle that researchers still have difficulty translating it into the language of engineering and machines.

“A great example of this is the task of picking a fresh peach off of a tree,” Zinn explains. “I could explain to you how to do it, and with a little bit of practice you would learn to squeeze it enough to decide if it’s ripe enough to pick, but not squeeze it so hard that you squish it. So, how can I describe that to a robot and make it do the same thing? Certainly, it’s not only a matter of precision. How do I describe what that physical interaction is so I can now, in a training environment for robots, achieve the desired result? We’re just getting started on that.”

~ ~ ~

‘Machine’ learning

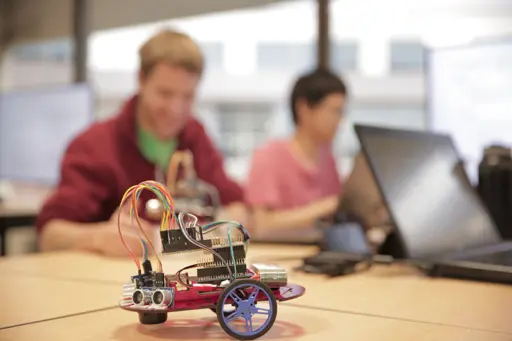

In addition to their robotics research, Zinn, Adamczyk and their colleagues teach courses (like the one pictured above) on robotics and control topics at both the undergraduate and graduate levels. In fall 2017, for example, Adamczyk taught Introduction to Robotics, a hands-on class for seniors and graduate students that introduces them to the key concepts and tools underpinning robotic systems in use and development today.