Today’s massive data centers have become a necessary evil in our information-driven lives. Here are a handful of ideas that can make them future-friendly.

In September 2024, tech giant Microsoft inked a power purchase agreement with Constellation Energy that involves restarting a reactor at the Three Mile Island nuclear power plant, which was shut down in 2019. The deal will allow the company to purchase the entire generating capacity of the 835-megawatt unit over a 20-year period, likely starting in 2028.

It’s enough electricity to power 800,000 typical American homes—but Microsoft and other tech giants need that much juice to keep up with the skyrocketing growth and massive energy demands of data centers. These warehouse-like banks of “cloud” computers enable everything from online shopping, video chats and multiplayer video games to streaming services, social media platforms and, more recently, artificial intelligence products.
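For readers who want to check the comparison, here is a rough back-of-the-envelope sketch. The plant capacity and number of homes come from the figures above; the 90% capacity factor is an assumption chosen for illustration, not a number from the deal.

```python
# Back-of-the-envelope check of the "835 MW is about 800,000 homes" comparison.
PLANT_CAPACITY_MW = 835   # from the article
HOMES_POWERED = 800_000   # from the article
HOURS_PER_YEAR = 8_760
CAPACITY_FACTOR = 0.9     # ASSUMPTION: share of the year the plant runs at full output


def implied_kwh_per_home(capacity_mw, homes, capacity_factor):
    """Annual energy per home implied by the plant's output."""
    annual_kwh = capacity_mw * 1_000 * HOURS_PER_YEAR * capacity_factor
    return annual_kwh / homes


kwh_per_home = implied_kwh_per_home(PLANT_CAPACITY_MW, HOMES_POWERED, CAPACITY_FACTOR)
print(f"Implied use per home: {kwh_per_home:,.0f} kWh per year")
```

Under that assumption, the implied figure lands in the high thousands of kilowatt-hours per home per year, the same order of magnitude as typical annual household electricity use.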

In fact, tech companies are beginning an unprecedented investment in data centers. In July 2024, Amazon announced $100 billion to build more than 200 data centers. In January 2025 alone, several top tech partners committed up to $500 billion to build out AI infrastructure, while Facebook parent company Meta announced it would spend $65 billion in 2025, following a roughly $40 billion investment in 2024.

However, as these data centers churn 24/7/365 and proliferate worldwide, they impose a major strain on the power grid and potable water supplies. They also produce enormous amounts of electronic waste and can cause noise pollution, lead to increases in local power costs, and spark conflicts over zoning and land use. Researchers are exploring engineering solutions that address these complex challenges while also yielding new flexible, scalable paradigms for computing and energy management.

Powerful pivot

The most obvious concern with data centers is their electricity consumption. According to the accounting firm Deloitte, in 2025, data centers will use 2% of the world’s electricity, a figure set to double over the next decade. Other less rosy estimates claim data centers may require 21% of global electricity production by 2030. That’s why Microsoft and other tech giants are locking in electricity deals while they can. Yet, in December 2024, the North American Electric Reliability Corporation, an energy watchdog group, warned that the fast pace of AI data center connections to the electricity grid, along with other trends, could make power generation in the United States increasingly unreliable for the next decade.

For the last 20 years, data centers were fairly benign energy users. In the early days of the internet, many companies maintained their own on-site servers. As those companies slowly migrated their computing needs to centralized data centers, economies of scale led to big efficiency gains. Case in point: Although internet traffic increased by 600% between 2015 and 2021, data center energy use increased by only 20% to 70%.
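To see how large those efficiency gains were, it helps to work out the energy used per unit of traffic. The short sketch below uses only the percentages quoted above; the function name is illustrative. Note that a 600% increase means traffic grew to seven times its 2015 level.

```python
def energy_intensity_ratio(traffic_increase_pct, energy_increase_pct):
    """New energy-per-unit-of-traffic as a fraction of the old value."""
    traffic_multiplier = 1 + traffic_increase_pct / 100   # +600% -> 7x
    energy_multiplier = 1 + energy_increase_pct / 100     # +20% -> 1.2x
    return energy_multiplier / traffic_multiplier


for energy_pct in (20, 70):
    ratio = energy_intensity_ratio(600, energy_pct)
    print(
        f"Energy +{energy_pct}%: intensity is {ratio:.0%} of the 2015 level, "
        f"a drop of {1 - ratio:.0%}"
    )
```

In other words, under either end of the range, the energy needed to move a given amount of traffic fell by roughly three-quarters or more over those six years.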

Today, the story is much different. “The easy gains are mostly exhausted at this point,” says Line Roald, an associate professor of electrical and computer engineering at UW-Madison who studies grid resiliency and optimization. “The AI boom is giving rise to whole new types of data centers that are expensive to build and use a lot of electricity. So, from the grid perspective, this is a lot of energy demand coming very quickly.”

Traditionally, tech companies have been pioneers in sustainable energy, signing substantial power-purchase agreements that have helped drive the growth of wind, solar, geothermal and other green energy sources. But Roald says their burgeoning need for new power could turn that progress on its head, especially if the utilities that serve those companies opt for cheaper, quickly constructed natural gas-powered plants to boost energy production.

More positively, she says there’s an opportunity for companies to be leaders in rethinking how the system works. “Data centers have an incredible opportunity to potentially be flexible, work with the grid on how they can more directly source renewable energy, and adapt how much energy they are consuming,” she says. “It’s interesting to think about what could incentivize this type of flexible behavior. How could it be beneficial to data center owners to sometimes modulate their power use in response to, for example, the real-time cost of electricity or the availability of renewable energy? These are research topics we here at UW-Madison are quite actively thinking about.”
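What might that kind of flexibility look like in practice? The sketch below is one simple possibility, not a description of the UW-Madison research: a data center keeps a fixed baseline load running every hour and pushes deferrable work (batch jobs, for example) into the cheapest hours. The function name, prices and load figures are all made up for illustration.

```python
def schedule_flexible_load(prices, baseline_mw, flexible_mwh, max_extra_mw):
    """Greedily place deferrable energy into the cheapest hours.

    prices        -- hypothetical hourly prices in $/MWh, one per hour
    baseline_mw   -- load that must run every hour (e.g. live services)
    flexible_mwh  -- deferrable work that must finish within the window
    max_extra_mw  -- headroom above baseline available in any single hour
    """
    load = [baseline_mw] * len(prices)
    remaining = flexible_mwh
    # Visit hours from cheapest to most expensive.
    for hour in sorted(range(len(prices)), key=lambda h: prices[h]):
        if remaining <= 0:
            break
        extra = min(max_extra_mw, remaining)  # one-hour slots, so MW equals MWh
        load[hour] += extra
        remaining -= extra
    if remaining > 0:
        raise ValueError("not enough headroom to place all flexible work")
    return load


# Made-up prices for an eight-hour window.
prices = [42, 35, 18, 12, 15, 60, 95, 80]
load = schedule_flexible_load(prices, baseline_mw=50, flexible_mwh=60, max_extra_mw=25)

flexible_cost = sum(p * mw for p, mw in zip(prices, load))
flat_cost = sum(p * (50 + 60 / len(prices)) for p in prices)
print(load)
print(f"flexible: ${flexible_cost:,.0f}  vs. evenly spread: ${flat_cost:,.0f}")
```

The same greedy idea would work with a carbon-intensity or renewable-availability signal in place of price. A real system would also have to respect job deadlines, cooling limits and grid constraints, which this sketch ignores.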

One emerging solution is co-location—siting data centers near energy sources, or vice versa. Instead of forcing local utilities to build new infrastructure—and possibly pass on costs to consumers—data centers would either own their own power source or sign a purchase agreement, similar to Microsoft’s with Constellation and Three Mile Island.

Ben Lindley, an assistant professor in nuclear engineering and engineering physics at UW-Madison, says that this type of arrangement makes sense for data centers, and that the next generation of nuclear reactors and small modular reactors is ideal for the industry.

“Nuclear power is a pretty good option for a data center, because it’s generated 24/7 and it’s reliable,” he says. “On top of that, it’s also a clean source of power—and many data center operators have made corporate commitments toward reducing emissions. They’re very interested in using nuclear power for that reason, and they’re also less vulnerable to things like fluctuations in natural gas prices.”

In fact, in October 2024, Google signed an agreement to purchase power from Kairos Power and Amazon inked a deal with X-energy. In January 2025, Sabey Data Centers, one of the largest data center developers, signed a deal with TerraPower. All three suppliers are developing small modular nuclear reactors that could provide dedicated energy for large-scale data centers. It will likely be a decade or more before the new reactors are deployed, but the investments may accelerate development of this emerging energy source.

Creative cooling

Energy consumption is just one data center concern. The warehouse-like buildings contain racks and racks of servers—the specialized computers that process, send and receive data. Large data centers can house 100,000 of those machines, while some data center complexes host up to a million of the machines on one campus.

Running 24/7, they generate a lot of heat—the enemy of electronics. Keeping those data warehouses cool takes a variety of technologies. The most common of those is using water as a liquid coolant to dissipate heat through evaporation. In many cases, that’s clean, potable water, which is more efficient and doesn’t corrode the cooling systems.

The impact, however, is huge. According to a study led by researchers at Virginia Tech, data centers are among the top-10 industrial water consumers, guzzling water from 90% of watersheds in the United States, including many highly stressed sites.

The good news is that tech companies—along with the communities that host their data centers—are experimenting with solutions, like siting data centers in naturally cool climates, or using smart technology to enable building systems to transfer some of the heavy lifting to cooler outdoor air.

One of the most promising innovations, however, is a closed-loop system that uses very little water. Rather than cooling an entire data center to a single ambient temperature, the closed-loop system circulates chilled water to individual servers, cooling their chips directly. Water inside the system is recycled continuously, significantly reducing overall water use.

A new Microsoft data center currently under construction in Mount Pleasant, Wisconsin, will pilot this design. “The shift to the next-generation data centers is expected to help reduce our water usage effectiveness to near zero for each data center employing zero-water evaporation,” wrote Steve Solomon, VP of data center infrastructure engineering at Microsoft, in a post introducing the system in December 2024.
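Water usage effectiveness, the metric Solomon mentions, is commonly defined as the liters of water a site consumes per kilowatt-hour of energy used by its IT equipment. The sketch below shows the calculation; the two facilities and all of their numbers are hypothetical and are not Microsoft figures.

```python
def water_usage_effectiveness(annual_water_liters, annual_it_energy_kwh):
    """WUE in liters per kWh: site water use divided by IT equipment energy."""
    if annual_it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return annual_water_liters / annual_it_energy_kwh


# HYPOTHETICAL facilities with the same IT load but different cooling designs.
IT_ENERGY_KWH = 100_000_000
evaporative = water_usage_effectiveness(150_000_000, IT_ENERGY_KWH)
closed_loop = water_usage_effectiveness(2_000_000, IT_ENERGY_KWH)

print(f"Evaporative cooling: {evaporative:.2f} L/kWh")
print(f"Closed-loop cooling: {closed_loop:.2f} L/kWh")
```

Because a closed-loop system recirculates the same water rather than evaporating it, the numerator shrinks toward the small amount needed for filling and maintenance, which is why the metric can approach zero.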

Microsoft is including the water-saving systems in the design of all of its new data centers, says Solomon. It’s hoped this innovation will spur other data center developers to adopt similar technologies.

Evolving efficiency

Tweaking the servers themselves, as well as the software that runs them, also provides opportunities for improving energy efficiency. On average, a server lasts about seven years. Given the tens or hundreds of thousands housed in a single data center, retiring older servers and junking those with broken components quickly adds up to tons of annual e-waste.

Joshua San Miguel, an associate professor of electrical and computer engineering at UW-Madison who studies computer architecture and low-energy computer software, says there are emerging ways to get more life out of the servers. Extending the useful life of the machines even by a year or two could reduce the waste, energy and emissions baked into their manufacture.

Developing more flexible, less demanding software that can run on less powerful or older computers, as well as state-of-the-art servers, could allow data centers to mix and match older machines and newer computers, scaling up for intensive computing tasks and scaling down for less demanding work as needed.

“Software developers have to figure out how to rewrite code to be able to adapt to this environment,” says San Miguel, who is researching the paradigm. “Hardware and software developers can then make their systems more flexible and still get the same performance. It’s almost invisible; you won’t even notice it’s slower, even though it’s actually running on older devices.”

In fact, says San Miguel, with the right algorithms, there may even be a place in data centers for broken or outmoded machines that still have enough resources to complete relatively mundane AI tasks, like classifying objects or summarizing email.
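As a rough illustration of the mix-and-match idea, the sketch below places each task on the smallest machine that can still handle it, so light jobs land on older hardware and only heavy jobs occupy the newest servers. This is a generic best-fit heuristic, not San Miguel's method, and the server names, task names and capacity units are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    capacity: int  # hypothetical units of available compute


@dataclass
class Task:
    name: str
    demand: int  # same hypothetical units


def place_tasks(tasks, servers):
    """Best-fit placement: each task gets the tightest server that can hold it."""
    free = {s.name: s.capacity for s in servers}
    placement = {}
    # Place the most demanding tasks first so they are not crowded out.
    for task in sorted(tasks, key=lambda t: t.demand, reverse=True):
        candidates = [name for name, cap in free.items() if cap >= task.demand]
        if not candidates:
            placement[task.name] = None  # nothing in the fleet can run it right now
            continue
        chosen = min(candidates, key=lambda name: free[name])
        free[chosen] -= task.demand
        placement[task.name] = chosen
    return placement


servers = [Server("old-2018", 4), Server("mid-2021", 8), Server("new-2025", 40)]
tasks = [
    Task("train-model", 24),
    Task("summarize-email", 2),
    Task("classify-images", 3),
    Task("transcode-video", 6),
]
placement = place_tasks(tasks, servers)
for task_name, server_name in placement.items():
    print(f"{task_name} -> {server_name}")
```

In this toy example only the training job needs the newest server; the mundane tasks fit on the older machines, which is the kind of outcome that could keep aging hardware useful for longer.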

On a longer timescale, the development of next-generation semiconductors is key to improving data center efficiency. Current CPUs and GPUs, the processors that are the “brains” of computer systems, are made with billions of silicon transistors that flip on and off. Over time, chipmakers have found innovative ways to cram more and more transistors onto chips, increasing their computing power. But as these transistors near the size of atoms, they are approaching fundamental physical limits.

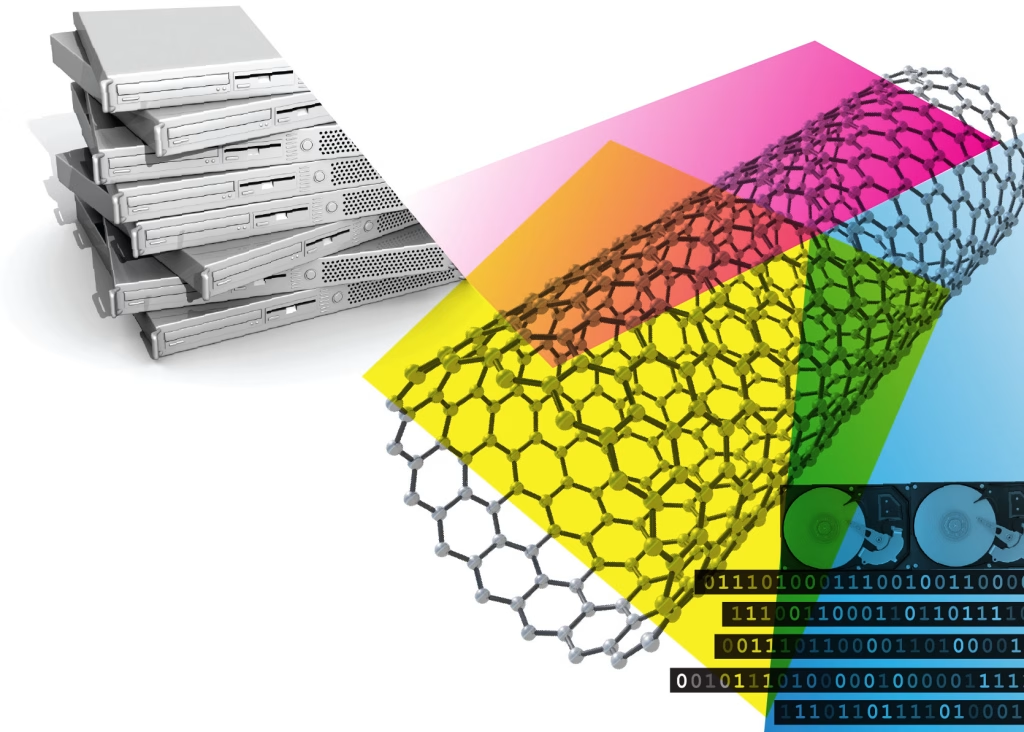

Michael Arnold, the Beckwith-Bascom Professor in materials science and engineering at UW-Madison, says next-generation semiconductor materials are needed to improve the computational power and energy efficiency of processors. Currently, a wide variety of candidates are being studied to massively improve computing power, speed and efficiency. Among them are ferroelectric, phase change, and 2D materials, as well as Arnold’s specialty, carbon nanotubes.

“If you can improve the semiconductors used in computer chips, you can have flexibility to either be more energy efficient or perform more computation per second,” says Arnold.

Either of those could have huge impacts on how data centers operate. One of the biggest gains, he says, could come from using these next-generation materials to tweak circuit design. Models show that making 3D chips, which layer memory over the processor instead of building the two as separate components, could improve computing-per-power efficiency by a factor of 1,000.

In little more than two decades, data centers—like the power grid or wastewater systems—have become essential, critical infrastructure. It’s likely their utility will only grow in the coming years. As a result, investing now in ways to improve data centers over time will not be wasted effort, says San Miguel. “Data centers are a fundamental creation that we will have to live with forever,” he says. “For efficiency purposes, it’s the best way to go.”